Introduction:

IR Technology

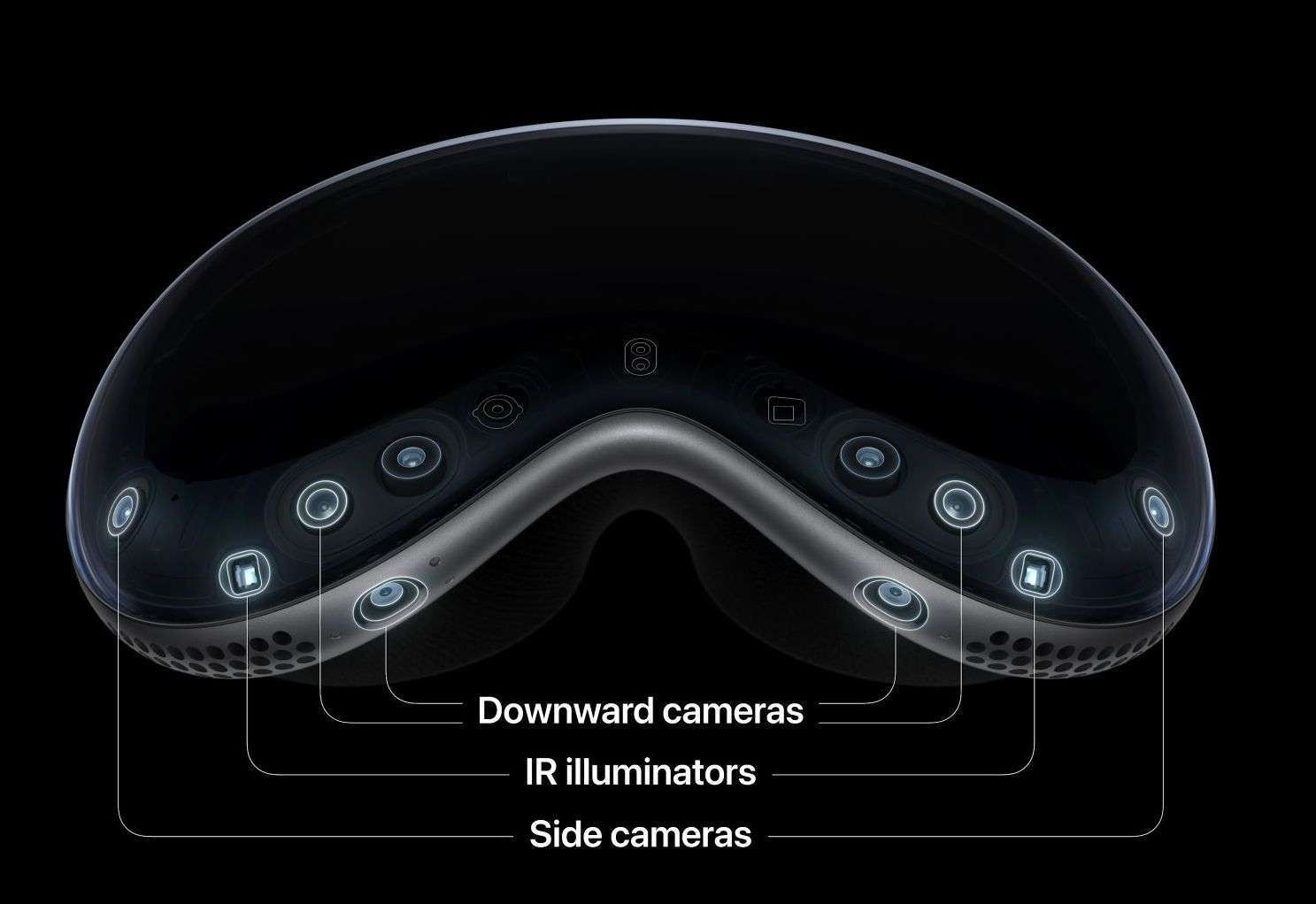

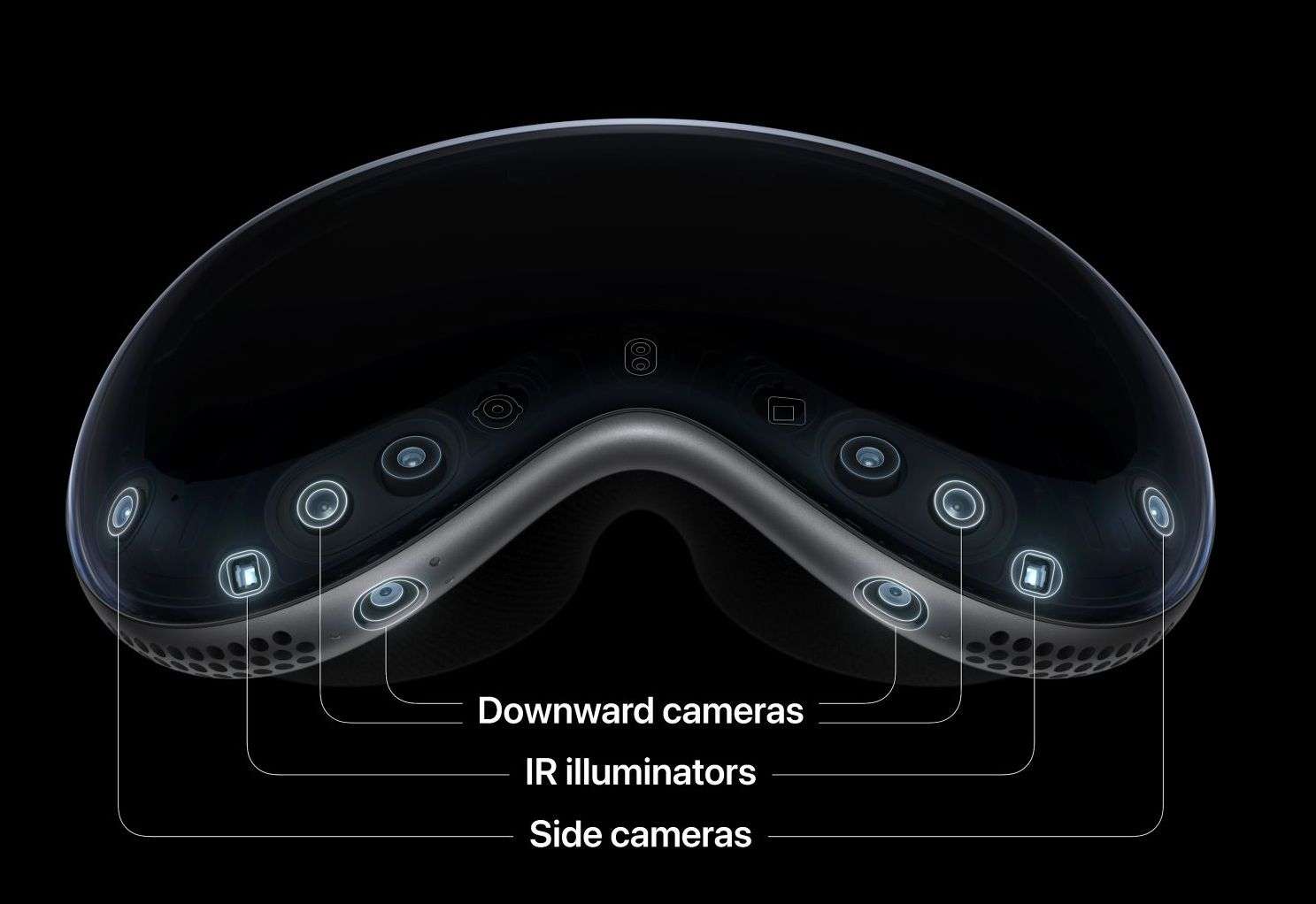

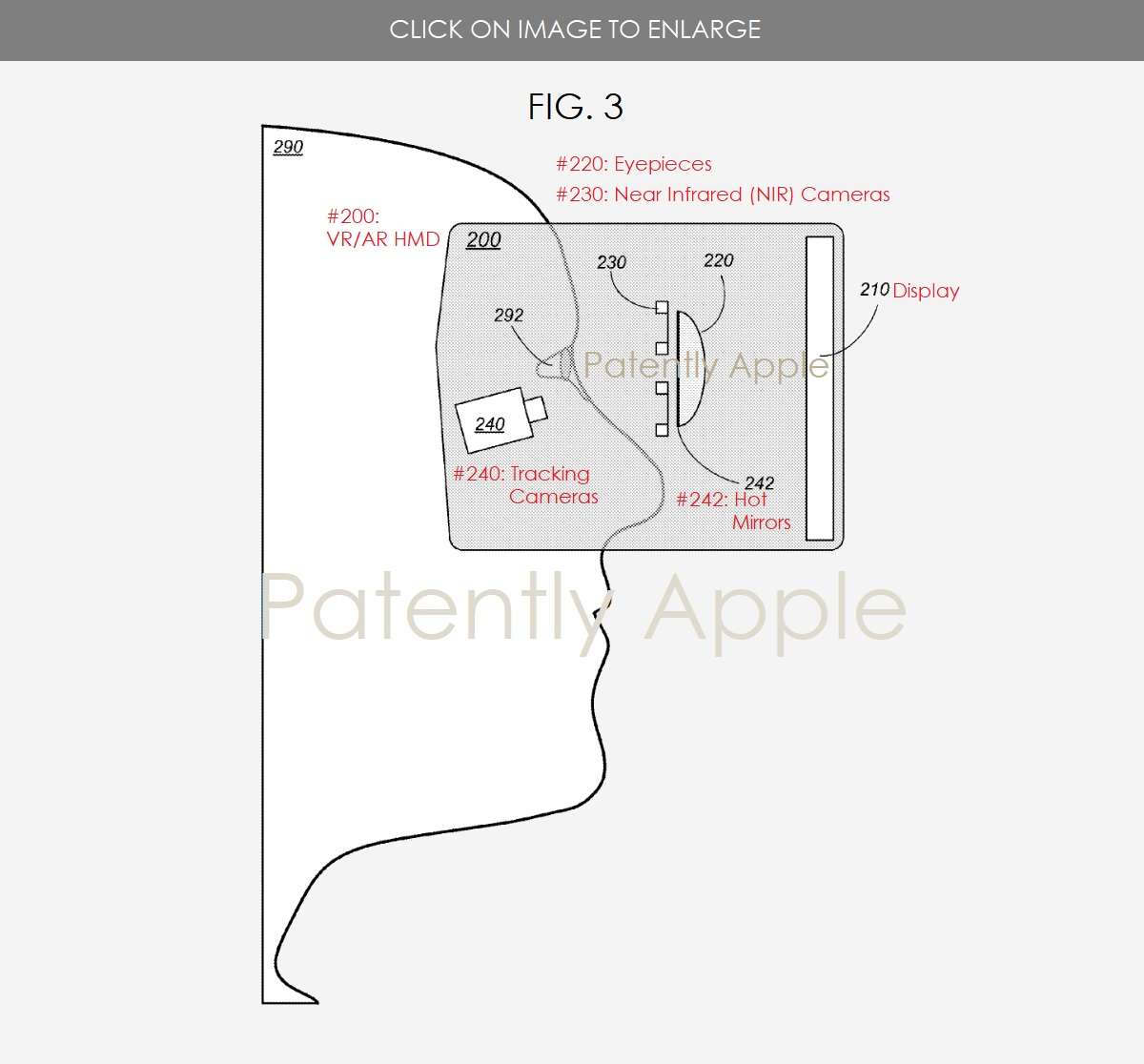

Close-up image of the advanced Infrared (IR) sensor technology used in Apple’s Vision Pro, showcasing the innovative use of IR in eye and hand tracking for immersive mixed reality experiences.

In a landmark technological leap, Apple has unveiled its groundbreaking AR/VR headset, the Apple Vision Pro, thereby ushering in a new era of spatial computing. While this state-of-the-art device boasts an array of innovative features, it’s the integration of Infrared (IR) technology for unprecedented eye tracking capabilities that truly distinguishes it from the rest. This blog post will provide an in-depth exploration of how Apple’s implementation of IR technology is poised to revolutionize the industry.

A New Dawn in Spatial Computing:

“Today marks the beginning of a new era for computing,” announced Tim Cook, Apple’s CEO. “Just as the Mac introduced us to personal computing, and iPhone introduced us to mobile computing, Apple Vision Pro introduces us to spatial computing. Built upon decades of Apple innovation, Vision Pro is years ahead and unlike anything created before — with a revolutionary new input system and thousands of groundbreaking innovations. It unlocks incredible experiences for our users and exciting new opportunities for our developers.”

The Science of Infrared Sensors:

Infrared (IR) sensors are electronic devices that detect and measure IR radiation, an invisible form of radiant energy that is part of the electromagnetic spectrum. They are commonly used in a variety of applications, including heat sensing, night vision, and tracking. IR sensing rests on the principle that every object above absolute zero emits IR radiation.

There are two types of IR sensors – active and passive. Active IR sensors operate by emitting IR radiation and then measuring the radiation that is reflected back from an object. Passive IR sensors, on the other hand, only measure the IR radiation emitted by an object itself.

Working Principle of IR Sensors:

An active IR sensor usually consists of an IR LED (emitter) and an IR photodiode (detector). The IR LED emits light at infrared wavelengths, and the photodiode senses IR light and converts it into an electrical signal.

When the emitted IR radiation strikes an object, some of the radiation is absorbed and some is reflected back. The reflected radiation is detected by the IR photodiode. As the amount of reflected IR radiation changes (due to eye or hand movements, for instance), the amount of current flowing through the photodiode changes, which can be measured and used as an indication of movement.
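The idea that a change in reflected IR indicates movement can be illustrated with a minimal sketch. The normalized readings, the `detect_movement` name, and the threshold value are all assumptions made for illustration. None of them describes Apple's hardware or software.

```python
def detect_movement(samples, threshold=0.05):
    """Return the indices where the reading changes by more than `threshold`.

    `samples` is a hypothetical list of normalized photodiode readings (0.0 to 1.0).
    """
    events = []
    for i in range(1, len(samples)):
        # A large jump between consecutive readings suggests the reflecting
        # surface (an eye or a hand, for instance) has moved.
        if abs(samples[i] - samples[i - 1]) > threshold:
            events.append(i)
    return events


readings = [0.50, 0.51, 0.50, 0.72, 0.71, 0.40, 0.41]
print(detect_movement(readings))  # [3, 5]
```

Small fluctuations stay below the threshold and are ignored, while the two larger jumps are reported as movement.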

Eye Tracking with IR Sensors:

The use of IR sensors for eye tracking, as seen in Apple Vision Pro, is an innovative application of this technology. In this system, IR illuminators serve as the emitting half of an active IR setup, directing near-infrared (NIR) light towards the user’s eyes. Hot mirrors located at or near the eye-facing surfaces of the eyepieces reflect at least a portion of the NIR light while allowing visible light to pass.

The NIR cameras, located at or near the user’s cheekbones, serve as the detecting half of the setup, capturing the NIR light reflected off the user’s eyes. The images captured by the NIR cameras are then analyzed to detect the position and movements of the user’s eyes.
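As a rough illustration of the image-analysis step, the sketch below estimates a pupil center by averaging the coordinates of dark pixels. It relies on the common observation that the pupil tends to appear dark under off-axis NIR illumination. The tiny grayscale frame, the `pupil_center` name, and the threshold are assumptions, and a production tracker would be far more elaborate.

```python
def pupil_center(image, dark_threshold=50):
    """Estimate the pupil center as the centroid of pixels below the threshold.

    `image` is a list of rows of 0-255 grayscale values (hypothetical data).
    Returns an (x, y) tuple, or None if no dark pixels are found.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < dark_threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


frame = [
    [200, 200, 200, 200, 200],
    [200,  20,  20,  20, 200],
    [200,  20,  20,  20, 200],
    [200,  20,  20,  20, 200],
    [200, 200, 200, 200, 200],
]
print(pupil_center(frame))  # (2.0, 2.0)
```

Tracking how this center shifts from frame to frame is one simple way to turn a stream of eye images into a movement signal.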

The precise tracking of the user’s gaze direction and the low latency are achieved by integrating this IR technology with sophisticated image processing algorithms and dedicated processing chips such as Apple’s R1. According to Apple, the R1 processes input from the headset’s cameras, sensors, and microphones in real time and streams new images to the displays within 12ms, so the 12ms figure describes the overall sensor-to-display pipeline rather than eye tracking alone. This lets the headset respond almost immediately to the user’s gaze direction and eye movements.

IR Sensors in Hand Tracking:

In addition to eye tracking, IR sensors are also used in Vision Pro for hand tracking. IR illuminators light the user’s hands, and cameras capture the light reflected off them. Changes in the captured images are used to detect the movements and positions of the user’s hands, allowing for intuitive gesture-based interactions with the mixed reality environment.

The incorporation of IR sensors into Apple’s Vision Pro headset marks a significant step forward in the development of spatial computing technology. By using IR sensors to accurately track the movements of the user’s eyes and hands, Apple is pushing the boundaries of what is possible in AR/VR technology, providing users with a truly immersive and intuitive mixed reality experience.

More on Apple’s Innovative Infrared Eye Tracking System:

Apple’s infrared eye tracking system is truly a marvel of modern engineering. It comprises an array of carefully coordinated components, including hot mirrors, near infrared (NIR) cameras, and illuminators, all of which work together to deliver a seamless and intuitive interaction experience.

- The Hot Mirrors: The hot mirrors are strategically positioned at or near the eye-facing surfaces of the Vision Pro eyepieces. Their role is to reflect infrared light while allowing visible light to pass, thereby acting as a kind of ‘window’ between the user’s eyes and the NIR cameras. This positioning allows the NIR cameras, which are located at the sides of the user’s face near the cheekbones, to capture precise images of the user’s eyes without having to image through the eyepieces.

- The Near Infrared Cameras: The NIR cameras are designed to work optimally with NIR light, which is invisible to the human eye but reflects well off the eye’s surface. These cameras capture images of the user’s eyes as they are reflected in the hot mirrors. As the user’s gaze shifts, these images change, and this data is used to track the user’s point of gaze and eye movements in real time.

- The Illuminators: The illuminators are essentially a source of NIR light. They illuminate the user’s eyes, and a portion of this light is reflected back to the hot mirrors and subsequently captured by the NIR cameras. This process of illumination, reflection, and image capture is how the eye tracking system is able to detect and analyze the position and movements of the user’s eyes so accurately.

Together, these components form a sophisticated eye tracking system that can detect subtle changes in the user’s gaze, enabling gaze-based interaction with the content displayed on the near-eye display of the Vision Pro. In other words, where the user looks, the headset ‘sees’ and responds accordingly.
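To show how image measurements might become a point of gaze, here is a minimal sketch of a calibration step. It assumes the tracker reports an offset between the pupil center and an illuminator reflection on the cornea, a technique widely used in eye tracking. The source does not confirm that Vision Pro works this way. The function names, the per-axis linear model, and the sample data are all hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    variance = sum((x - mean_x) ** 2 for x in xs)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def calibrate(offsets, targets):
    """Fit one line per axis, mapping measured offsets to display coordinates."""
    fit_x = fit_line([o[0] for o in offsets], [t[0] for t in targets])
    fit_y = fit_line([o[1] for o in offsets], [t[1] for t in targets])
    return fit_x, fit_y


def estimate_gaze(model, offset):
    """Apply the calibrated model to a new offset measurement."""
    (slope_x, intercept_x), (slope_y, intercept_y) = model
    return (slope_x * offset[0] + intercept_x, slope_y * offset[1] + intercept_y)


# Hypothetical calibration: the user looks at three known display targets.
offsets = [(-10, -5), (0, 0), (10, 5)]
targets = [(0, 0), (500, 250), (1000, 500)]
model = calibrate(offsets, targets)
print(estimate_gaze(model, (5, 2.5)))  # (750.0, 375.0)
```

Real systems typically use richer models that account for head position and eye geometry, but the calibrate-then-map structure is the same.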

The integration of this advanced eye tracking technology into the Vision Pro headset represents a quantum leap in the world of spatial computing. By offering a natural, intuitive, and efficient means of interaction, Apple’s IR eye tracking technology is poised to revolutionize not just the virtual and augmented reality industry, but also the broader landscape of digital interaction.

The potential applications are extensive and diverse, ranging from gaming, education, and entertainment to accessibility and customization. As we eagerly await the release of the Apple Vision Pro, this exciting technology holds enormous promise.

How Apple’s IR Technology Revolutionizes User Interaction:

Apple’s IR eye tracking offers an immersive and highly personalized interaction experience. The system allows the user to select and interact with virtual objects by merely looking at them and using hand gestures. It’s not only the realm of entertainment, such as movies or live sports events, that stands to be transformed but also everyday computing tasks like web browsing, messaging, and FaceTime. This powerful technology thus blurs the line between the physical and digital worlds, offering an enriched, all-encompassing, immersive computing experience.
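The look-then-gesture interaction described above can be sketched as a simple hit test: the gaze point picks the target, and a hand gesture confirms it. The `Target` class, the `select` function, and the rectangular layout are assumptions made for illustration and do not reflect Apple's APIs.

```python
from dataclasses import dataclass


@dataclass
class Target:
    """A hypothetical rectangular UI element."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px, py):
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def select(targets, gaze_point, gesture_detected):
    """Return the name of the target under the gaze when a gesture occurs."""
    if not gesture_detected:
        return None
    for target in targets:
        if target.contains(*gaze_point):
            return target.name
    return None


ui = [Target("Safari", 0, 0, 100, 100), Target("Messages", 120, 0, 100, 100)]
print(select(ui, (150, 40), gesture_detected=True))   # Messages
print(select(ui, (150, 40), gesture_detected=False))  # None
```

Separating "where the user looks" from "when the user confirms" is what keeps a glance from triggering an accidental selection.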

This technology is not brand new, and other companies have used IR, NIR, and IR illumination technology before. Here are a few earlier headsets that implement infrared eye tracking systems, compared with the new Apple Vision Pro.

Compare and Contrast Previous IR Sensor Systems:

In terms of the use of IR sensors and the response time of the sensors, let’s compare Apple’s Vision Pro with its notable predecessors:

- Apple Vision Pro vs. Microsoft HoloLens 2: HoloLens 2 uses NIR light sources and sensors to illuminate and track the user’s eyes. Apple quotes a latency of only 12ms for Vision Pro, which it describes as eight times faster than the blink of an eye. Microsoft has not publicly stated the exact latency of the HoloLens 2’s eye-tracking system, so a like-for-like comparison is not possible from published figures, although Apple’s headset appears to be ahead in performance.

- Apple Vision Pro vs. Tobii Pro Glasses 2: Tobii Pro Glasses 2 is designed for a different market, primarily research and user experience testing. They use infrared sensors for accurate gaze direction and pupil movement tracking. On the other hand, Apple’s Vision Pro not only tracks the eyes’ movement and gaze direction but also allows for interaction within a mixed reality environment, offers external tracking, and operates with incredibly low latency. This highlights Apple’s more advanced and application-rich use of IR technology in its headset.

- Apple Vision Pro vs. Varjo VR: Varjo’s VR headset integrates robust eye-tracking technology using IR illuminators and cameras, but it mainly focuses on the professional and enterprise market. Varjo has not disclosed the exact latency of its eye-tracking system. However, Apple’s Vision Pro, with its 12ms latency and the incorporation of the advanced R1 chip, suggests a faster, more efficient processing of eye-tracking data. Furthermore, the Vision Pro’s use of IR technology extends beyond just eye-tracking to include environmental mapping and hand-tracking.

- Apple Vision Pro vs. FOVE VR: FOVE VR employs small infrared cameras to track the direction of the user’s gaze. While this was a unique and innovative feature, FOVE has not released detailed technical specifications regarding the latency of their eye-tracking system. In comparison, Apple’s Vision Pro offers an overall more comprehensive package, with its eye-tracking system contributing to an array of features from performance optimization to accessibility.

Although IR sensor technology and quick response times are not new in the AR/VR industry, the degree of integration, the low latency, and the wide-ranging applications of these technologies in Apple’s Vision Pro appear to set a new standard for future developments. Apple’s use of IR sensors in a dual role, both internally for eye tracking and externally for environmental sensing, indicates a more advanced and versatile implementation compared to earlier devices.
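The latency claim quoted in the comparison can be checked with simple arithmetic. The sketch below only restates the two figures from the post, 12ms and "eight times faster than the blink of an eye". The constant and function names are illustrative.

```python
LATENCY_MS = 12   # latency figure quoted for Vision Pro
BLINK_RATIO = 8   # "eight times faster than the blink of an eye"


def implied_blink_duration_ms(latency_ms, ratio):
    """Blink duration implied by a latency and a 'times faster' ratio."""
    return latency_ms * ratio


print(implied_blink_duration_ms(LATENCY_MS, BLINK_RATIO))  # 96
```

In other words, the claim implies a blink of roughly a tenth of a second.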

Enabling Efficient Resource Allocation:

Apple Vision Pro’s precise eye-tracking capabilities also promise efficient resource allocation. By knowing precisely where the user’s gaze is directed, the headset can intelligently manage computational resources. This approach, commonly known as foveated rendering, produces high-quality visuals where the user is looking while spending fewer resources on peripheral regions.
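A minimal sketch of gaze-dependent rendering is shown below: regions near the gaze point are drawn at full resolution, and the scale falls off with distance. The radius, the linear falloff, and the minimum scale are assumptions chosen for illustration, not values from Apple.

```python
import math


def render_scale(pixel, gaze, full_res_radius=200.0, min_scale=0.25):
    """Return a resolution scale in [min_scale, 1.0] for a screen position.

    Positions within `full_res_radius` of the gaze point get full resolution.
    Beyond that, the scale drops linearly until it reaches `min_scale`.
    """
    distance = math.dist(pixel, gaze)
    if distance <= full_res_radius:
        return 1.0
    # Fraction of the way through a falloff band three radii wide.
    falloff = (distance - full_res_radius) / (3 * full_res_radius)
    return max(min_scale, 1.0 - falloff * (1.0 - min_scale))


gaze = (0, 0)
print(render_scale((100, 0), gaze))   # 1.0
print(render_scale((500, 0), gaze))   # 0.625
print(render_scale((2000, 0), gaze))  # 0.25
```

The savings come from the periphery, which covers most of the display area but is where the eye resolves the least detail.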

Empowering Accessibility:

The advanced IR eye tracking technology is an exceptional stride towards inclusivity. It promises a future where individuals with mobility impairments can navigate virtual worlds effortlessly. The technology also holds the potential to support gaze-based assistive technologies, making digital content more accessible to people with disabilities.
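One common pattern in gaze-based assistive technology is dwell selection, where resting the gaze on a target for a set time counts as a click. The sketch below is a hypothetical illustration of that pattern. The `dwell_select` name, the sample format, and the 800ms dwell time are assumptions and are not taken from Apple.

```python
def dwell_select(gaze_samples, dwell_ms=800):
    """Return the first target the gaze rests on continuously for `dwell_ms`.

    `gaze_samples` is a list of (timestamp_ms, target_name_or_None) tuples,
    ordered by time. Returns None if no target is held long enough.
    """
    current, start = None, None
    for timestamp, target in gaze_samples:
        if target != current:
            # The gaze moved to something else, so restart the timer.
            current, start = target, timestamp
        elif target is not None and timestamp - start >= dwell_ms:
            return target
    return None


samples = [(0, "A"), (300, "A"), (500, "B"), (900, "B"), (1300, "B")]
print(dwell_select(samples))  # B
```

Because no hand gesture is required, this style of input can suit users who cannot pinch or tap reliably.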

The Apple Vision Pro – Availability and Pricing:

Starting at $3,499 (U.S.), the Apple Vision Pro will be available early next year on apple.com and at Apple Store locations in the U.S., with more countries coming later next year. Customers can learn about, experience, and personalize their fit for Vision Pro at Apple Store locations.

Catch up on the Apple Vision Pro spatial computer with their keynote video.

Conclusion:

Apple’s integration of IR eye-tracking technology in its Vision Pro headset marks a significant turning point in spatial computing. By refining this technology and integrating it so deeply, Apple not only enhances the overall user experience but also sets the stage for a more inclusive and personalized digital world. The industry waits with bated breath to experience the transformative potential of this remarkable innovation.